HW8


Task overview

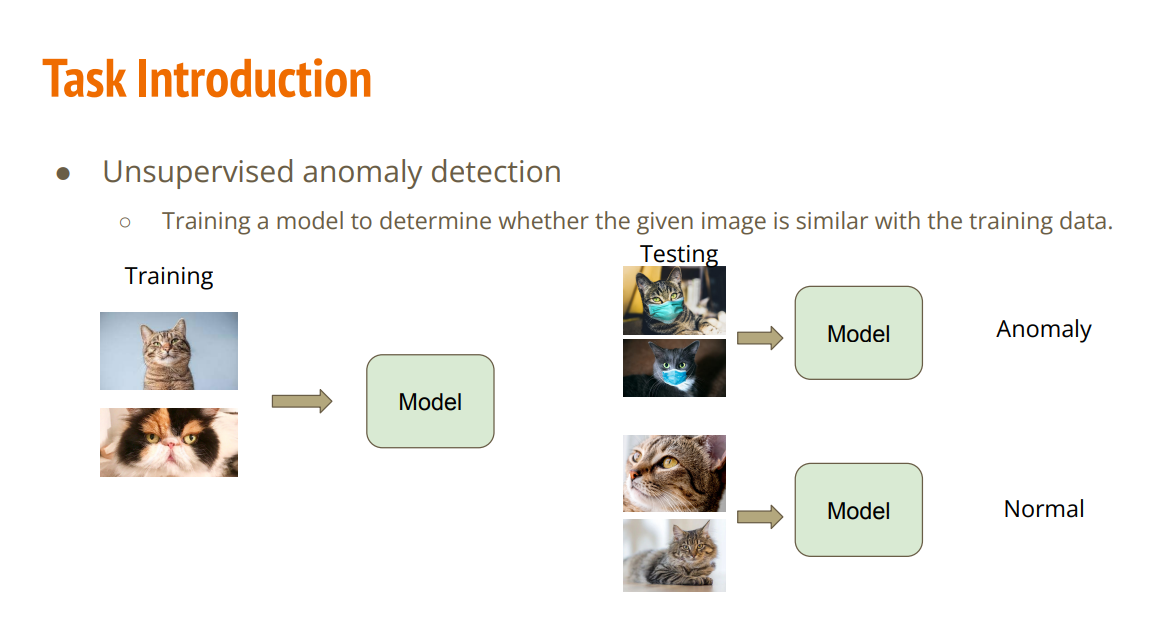

Unsupervised anomaly detection: the goal is to identify anomalous images. Given an input image, decide whether it is anomalous or normal.

Baselines

| Difficulty | Required score |
|---|---|
| simple | AUC >= 0.52970 |
| medium | AUC >= 0.72895 |
| strong | AUC >= 0.77196 |
| boss | AUC >= 0.79506 |
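To make the thresholds easier to apply, here is a small helper that reports the hardest baseline a given AUC clears. The thresholds are copied from the table; the function name `highest_baseline` is made up for this illustration.

```python
# Thresholds copied from the baseline table; the helper itself is illustrative.
BASELINES = [
    ("simple", 0.52970),
    ("medium", 0.72895),
    ("strong", 0.77196),
    ("boss", 0.79506),
]


def highest_baseline(auc):
    """Return the name of the hardest baseline whose threshold `auc` meets, or None."""
    passed = None
    for name, threshold in BASELINES:  # ordered from easiest to hardest
        if auc >= threshold:
            passed = name
    return passed


print(highest_baseline(0.52966))  # below the simple threshold, so None
print(highest_baseline(0.76865))  # clears medium but not strong
```

With the scores reported in this post, 0.52966 falls just short of the simple baseline and 0.76865 clears the medium one.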

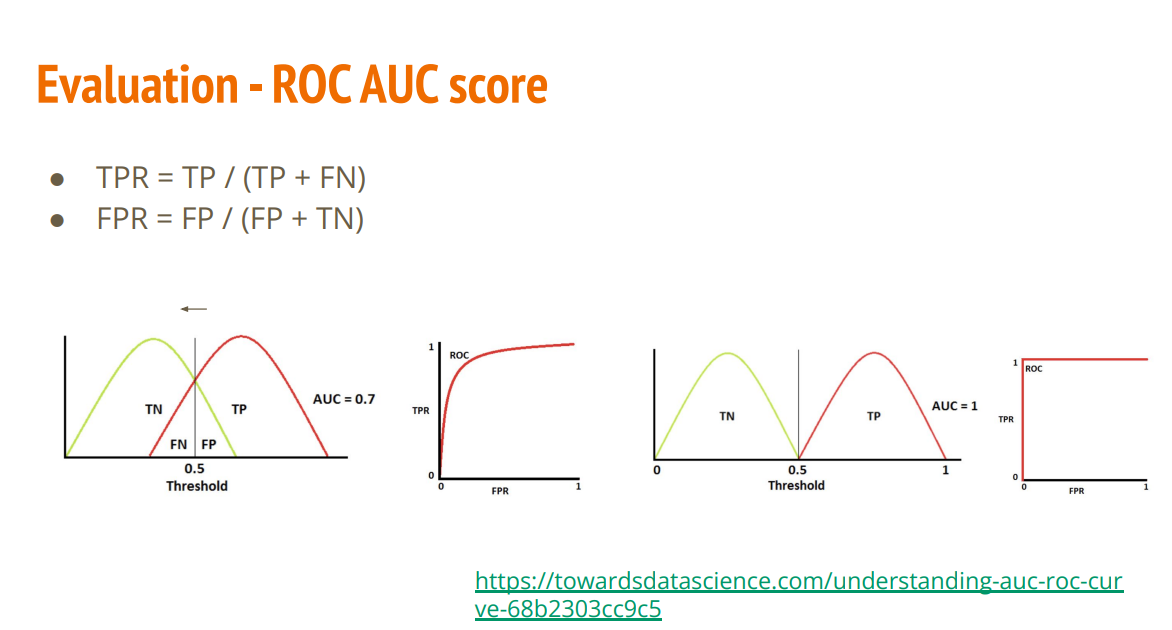

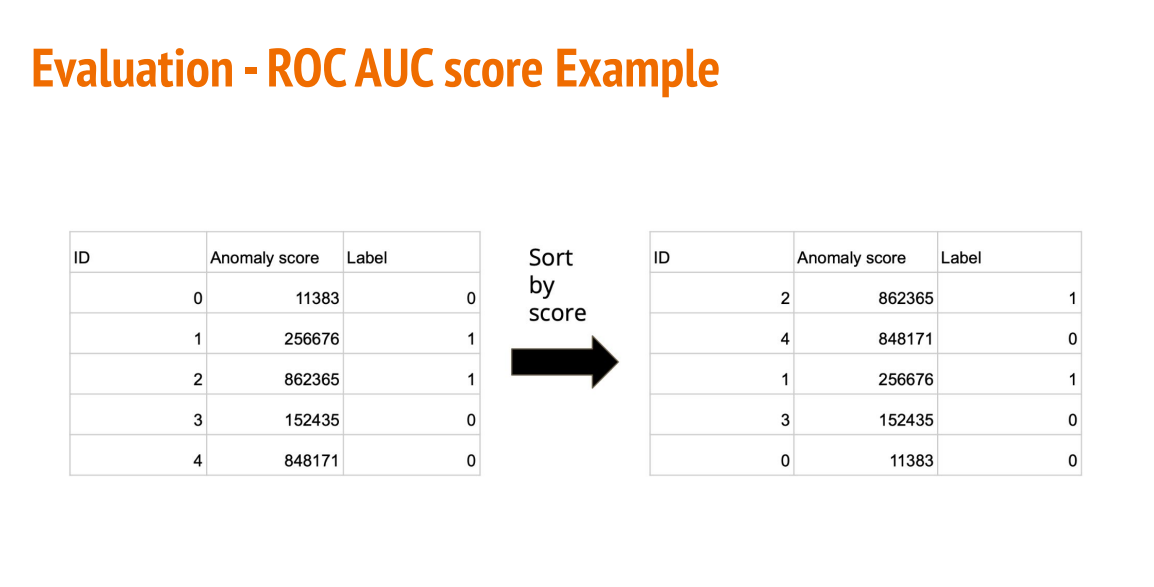

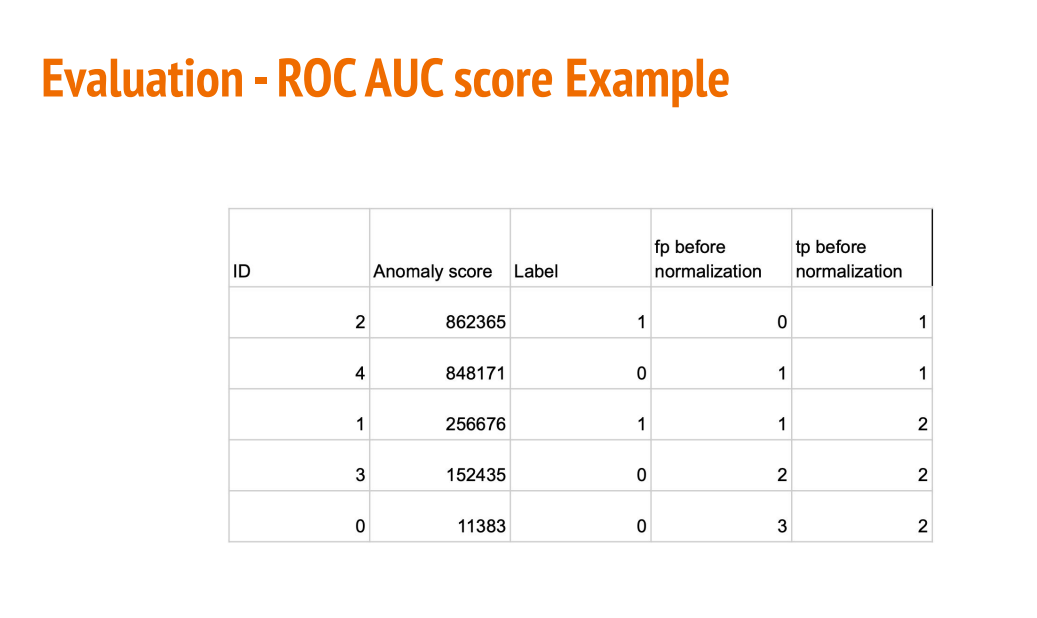

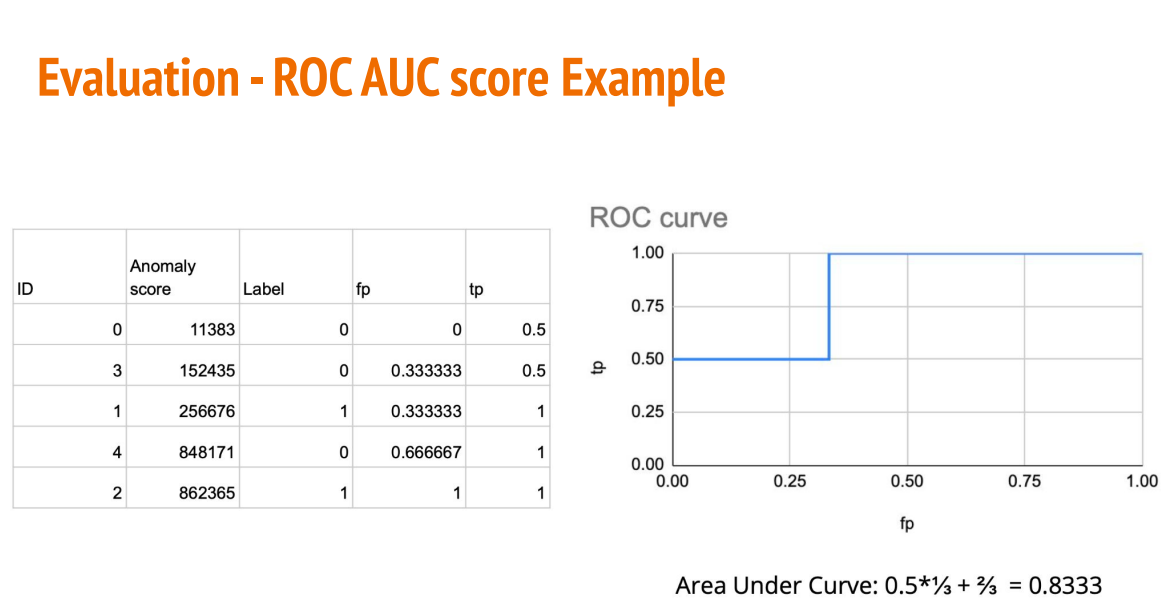

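AUC (Area Under Curve) is defined as the area enclosed between the ROC curve and the coordinate axes, so its value cannot exceed 1. Because the ROC curve of a useful detector generally lies above the line y = x, AUC usually falls between 0.5 and 1. The closer AUC is to 1.0, the more reliable the detection method; at 0.5 it is no better than random guessing and has no practical value.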
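An equivalent way to read AUC: it is the probability that a randomly chosen anomalous sample receives a higher anomaly score than a randomly chosen normal one, with ties counted as one half. The sketch below computes it by brute-force pairwise comparison. The function name `roc_auc` and the toy labels and scores are assumptions for illustration, not part of the homework code.

```python
def roc_auc(labels, scores):
    """AUC via pairwise comparison of anomalous (label 1) and normal (label 0) scores."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one sample of each class")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # anomalous sample correctly ranked above the normal one
            elif p == n:
                wins += 0.5   # a tie counts as half a win
    return wins / (len(pos) * len(neg))


# Toy data: 1 = anomalous, 0 = normal; scores are made-up anomaly scores.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(labels, scores))  # 3 of the 4 pairs are ranked correctly -> 0.75
```

The double loop is quadratic in the number of samples, so it is only meant to show the definition; a rank-based implementation is preferable on a real test set.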

Initial code, Score: 0.52966

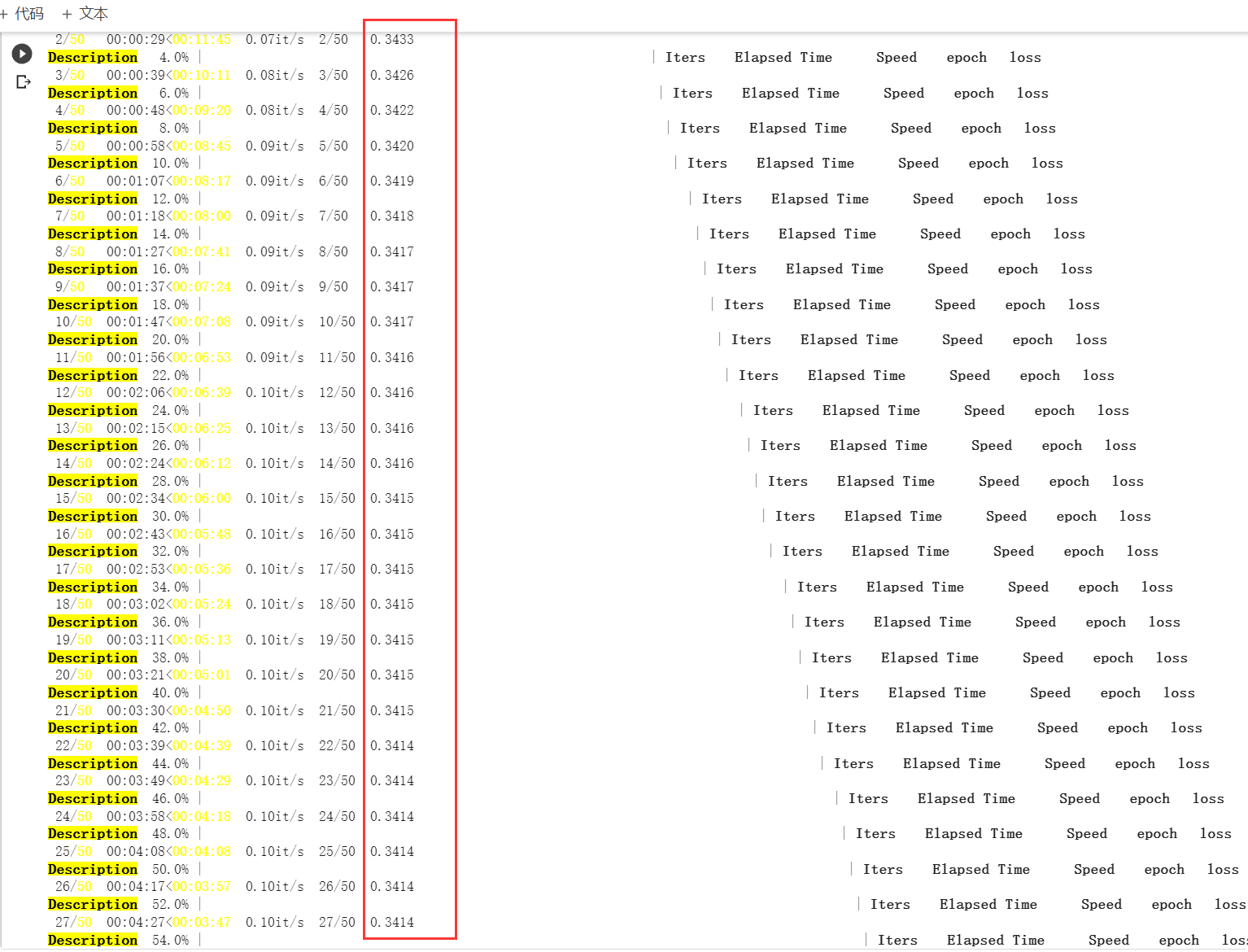

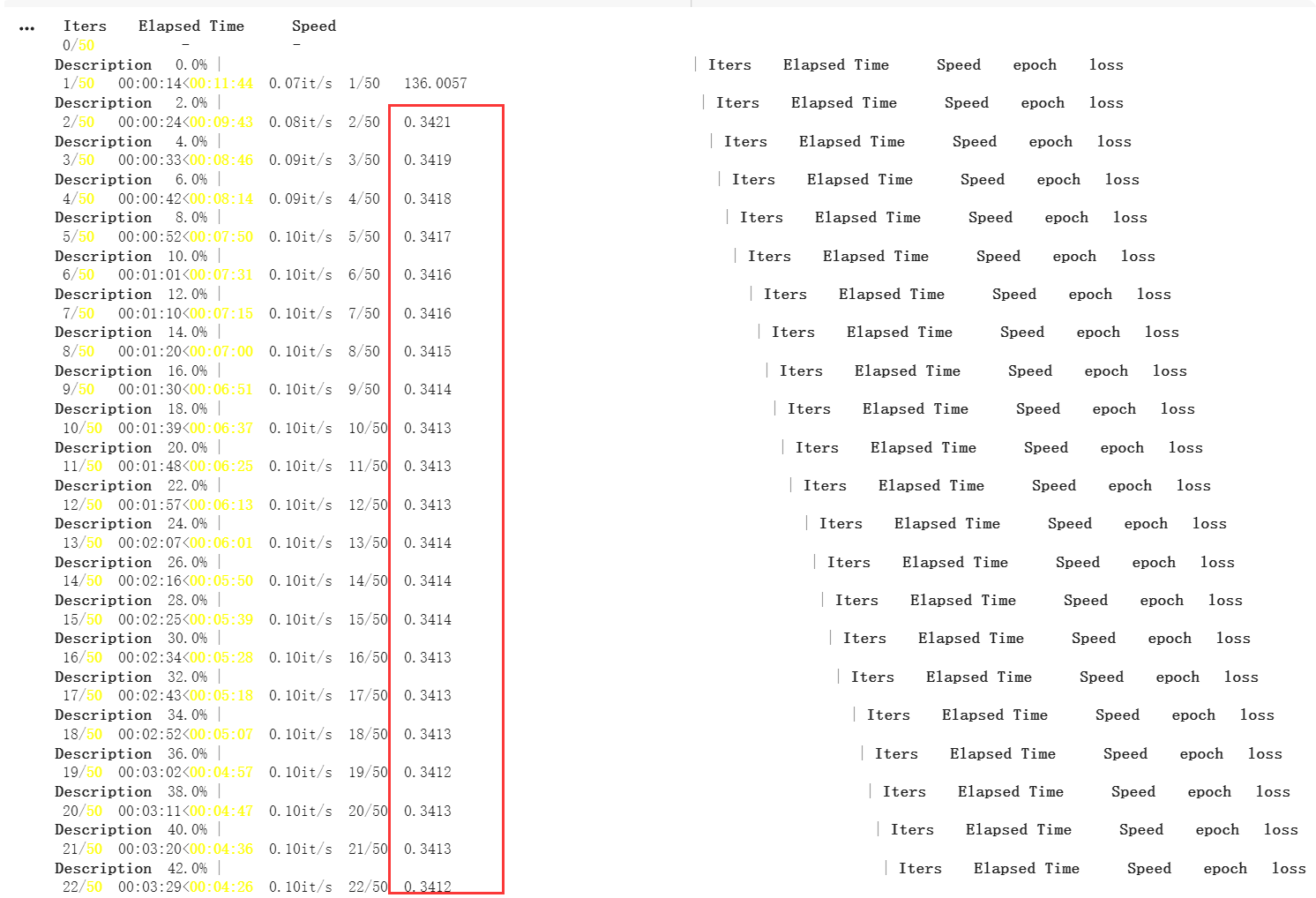

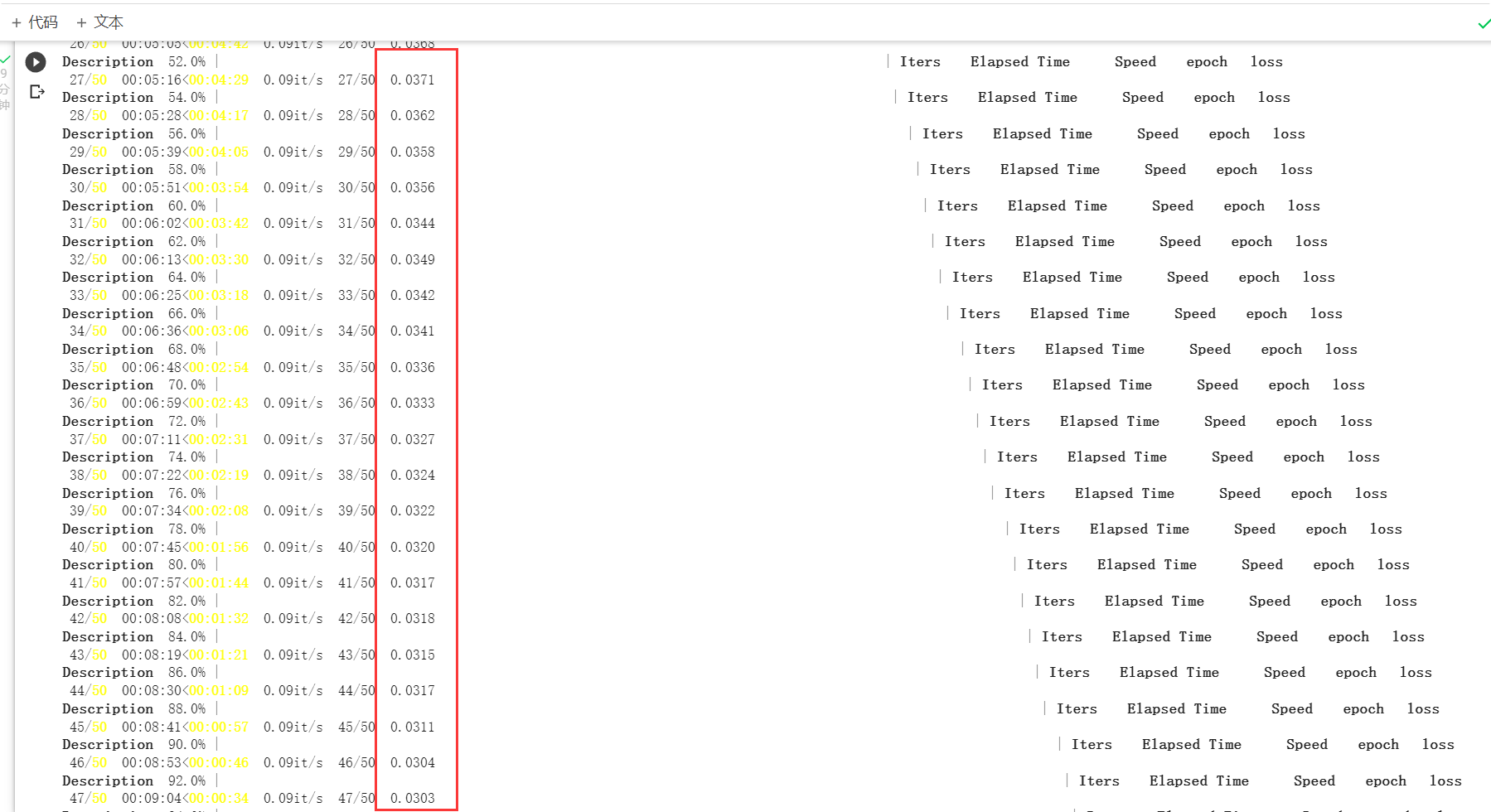

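The loss is small and decreases too slowly.

Increasing the learning rate did not help, so the learning rate is evidently not the main bottleneck. Looking at the model code shows that three models are provided: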

- `class fcn_autoencoder(nn.Module):`

- `class conv_autoencoder(nn.Module):`

- `class VAE(nn.Module):`

Medium Baseline

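Switching to the fcn_autoencoder model gives a large improvement, and the loss also starts out low.

Next, change the number of FCN layers and enlarge the feature representation vector.

Why use FCN?

- A typical CNN takes an image as input and outputs a single result: a value, such as a probability.

- An FCN takes an image as input and outputs an image as well, learning a pixel-to-pixel mapping.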

`class fcn_autoencoder(nn.Module):`
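Since the body of the class is not shown here, the following is only a rough sketch of what "changing the number of layers and enlarging the feature vector" could look like, plus one common way to turn the image-to-image mapping into an anomaly score (per-image reconstruction error). The class name `FcnAutoencoderSketch`, the 64x64x3 input size, the layer widths, the `Tanh` output (which assumes inputs scaled to [-1, 1]), and the helper `anomaly_scores` are all assumptions, not the homework's actual code.

```python
import torch
from torch import nn


class FcnAutoencoderSketch(nn.Module):
    """Fully connected autoencoder; every size below is an illustrative assumption."""

    def __init__(self, in_dim=64 * 64 * 3, hidden=(512, 128), latent=32):
        super().__init__()
        dims = [in_dim, *hidden, latent]
        enc = []
        for a, b in zip(dims[:-1], dims[1:]):
            enc += [nn.Linear(a, b), nn.ReLU()]
        enc = enc[:-1]  # no activation on the latent code
        rdims = dims[::-1]
        dec = []
        for a, b in zip(rdims[:-1], rdims[1:]):
            dec += [nn.Linear(a, b), nn.ReLU()]
        dec[-1] = nn.Tanh()  # assumes images are normalized to [-1, 1]
        self.encoder = nn.Sequential(*enc)
        self.decoder = nn.Sequential(*dec)

    def forward(self, x):
        return self.decoder(self.encoder(x))


def anomaly_scores(model, images):
    """Per-image mean squared reconstruction error; higher means more anomalous."""
    flat = images.flatten(start_dim=1)  # (N, C, H, W) -> (N, C*H*W)
    with torch.no_grad():
        recon = model(flat)
    return ((recon - flat) ** 2).mean(dim=1)


# Usage on random data shaped like a small batch of 64x64 RGB images.
model = FcnAutoencoderSketch()
batch = torch.rand(4, 3, 64, 64) * 2 - 1
print(anomaly_scores(model, batch).shape)  # torch.Size([4])
```

Adding entries to `hidden` deepens the network, and raising `latent` enlarges the feature vector; which combination works best has to be found by experiment.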

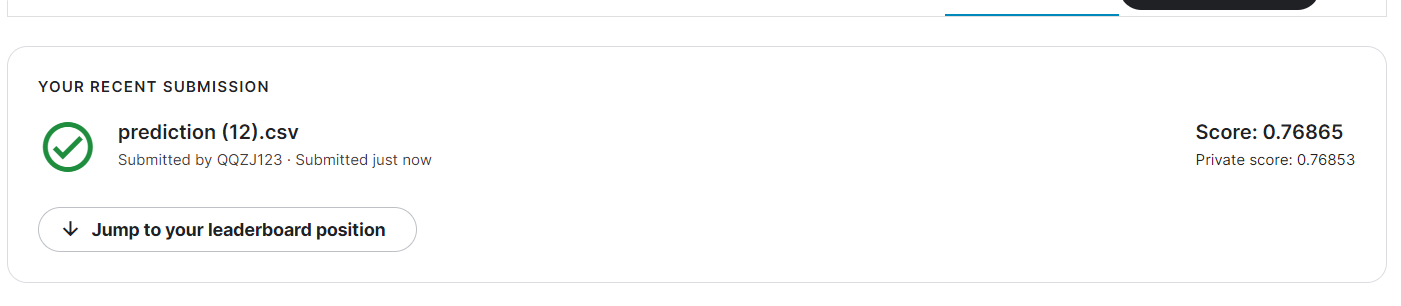

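After changing the number of layers, the score improves further. Score: 0.76865

Judging from the loss, this model seems to have little room left for further optimization.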

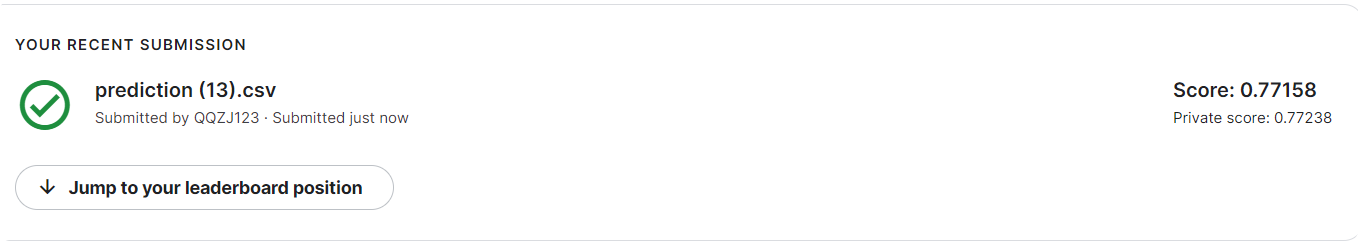

Strong Baseline

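Judging from the FCN model's loss, it seems time to switch models, so a ResNet model is used.

Why use ResNet?

Deeper networks can suffer from exploding or vanishing gradients, which leads to worse results. Images are locally correlated, and in image classification the gradients are locally correlated as well. If the gradients become close to white noise, gradient updates may amount to little more than random perturbation. ResNet is very good at preserving gradient correlation.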

`class ensemble(nn.Module):`

`class Residual_Block(nn.Module):`
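The point about gradients can be made concrete with a minimal residual block: the input is added back to the output of the convolutional branch, so the gradient always has an identity path to earlier layers. This is a generic sketch rather than the `Residual_Block` used in this post; the name `ResidualBlockSketch`, the 3x3 kernels, and the use of batch normalization are assumptions.

```python
import torch
from torch import nn


class ResidualBlockSketch(nn.Module):
    """Generic residual block that keeps the channel count and spatial size unchanged."""

    def __init__(self, channels):
        super().__init__()
        self.body = nn.Sequential(
            nn.Conv2d(channels, channels, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm2d(channels),
            nn.ReLU(),
            nn.Conv2d(channels, channels, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm2d(channels),
        )
        self.act = nn.ReLU()

    def forward(self, x):
        # Skip connection: output = activation(F(x) + x)
        return self.act(self.body(x) + x)


block = ResidualBlockSketch(channels=8)
x = torch.randn(2, 8, 16, 16)
print(block(x).shape)  # same shape as the input: torch.Size([2, 8, 16, 16])
```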

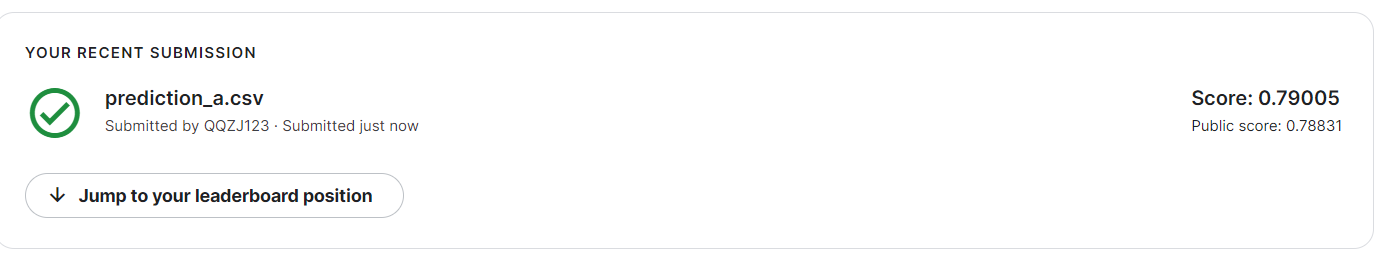

Boss Baseline (AUC >= 0.79506)

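An auxiliary network is added on top of the ResNet model. Its purpose is to map the low-resolution encoded feature maps back to feature maps at input resolution, so that per-pixel classification can be performed.

An extra decoder serves as this auxiliary network. Its loss function is controlled by the ResNet, and the result is stronger than ResNet alone.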

`class Auxiliary(nn.Module):`
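The body of `Auxiliary` is not shown, so the sketch below only illustrates the general idea of mapping a low-resolution feature map back to input resolution with transposed convolutions. The class name `UpsamplingDecoderSketch`, the channel counts, the number of upsampling steps, and the `Tanh` output are all assumptions, and the way the decoder's loss is tied to the ResNet is not reproduced here.

```python
import torch
from torch import nn


class UpsamplingDecoderSketch(nn.Module):
    """Doubles the spatial size `num_upsamples` times while reducing the channel count."""

    def __init__(self, in_channels=64, out_channels=3, num_upsamples=3):
        super().__init__()
        layers = []
        ch = in_channels
        for _ in range(num_upsamples - 1):
            # kernel 4, stride 2, padding 1 exactly doubles height and width
            layers += [nn.ConvTranspose2d(ch, ch // 2, kernel_size=4, stride=2, padding=1), nn.ReLU()]
            ch //= 2
        layers += [nn.ConvTranspose2d(ch, out_channels, kernel_size=4, stride=2, padding=1), nn.Tanh()]
        self.net = nn.Sequential(*layers)

    def forward(self, features):
        return self.net(features)


decoder = UpsamplingDecoderSketch()
features = torch.randn(2, 64, 8, 8)   # hypothetical low-resolution encoder output
print(decoder(features).shape)        # torch.Size([2, 3, 64, 64])
```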

- Title: HW8

- Author: moye

- Created: 2022-08-16 15:01:08

- Updated: 2025-12-12 18:22:53

- Link: https://www.kanes.top/2022/08/16/HW8/

- License: This article is licensed under CC BY-NC-SA 4.0.
